Traditional vs AI Web Scrapers: What's the Difference? A 2024 Comparison

Web scraping isn't optional anymore. In today's data-driven business landscape, it's the invisible force separating market leaders from the pack. Companies feast on data—competitor prices, market insights, content aggregation—turning the ability to efficiently harvest web information into razor-sharp competitive edge.

The metamorphosis of web scraping tells a compelling story. Gone are the days when simple scripts and rule-based tools dominated the landscape. Now, artificial intelligence has erupted onto the scene, fundamentally transforming what's possible. This seismic shift presents decision-makers with a critical inflection point: evolve with AI-powered solutions, or maintain course with traditional methods?

The implications stretch far beyond mere technical specifications. Traditional scrapers—reliable workhorses of the data collection world—still command respect in specific domains. Yet AI-powered solutions have rewritten the rules of what's achievable in web data extraction. The gap between these approaches widens daily.

For business leaders and data professionals navigating this shifting terrain, clarity is paramount. The choice between traditional and AI-powered scraping tools can cascade through an organization, affecting everything from operational efficiency to strategic agility.

This comprehensive guide cuts through the complexity. We'll dissect both approaches, illuminating their strengths, exposing their limitations, and mapping their ideal use cases. By the final word, you'll possess the insights needed to chart your organization's course through the web scraping landscape.

Understanding Traditional Web Scrapers

Meet the veterans of web automation: traditional scrapers. These first-generation tools, born in an era of static HTML, still stand as monuments to rule-based efficiency. They're digital archaeologists, meticulously unearthing data through predefined pathways.

How Traditional Scrapers Work

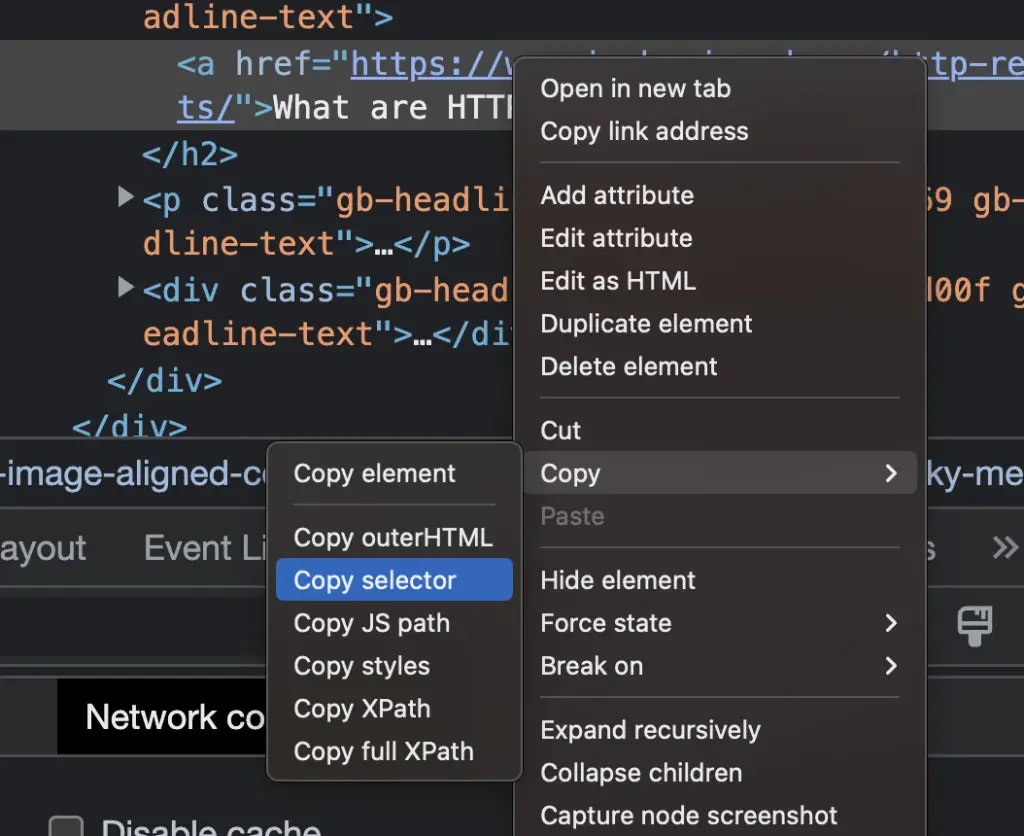

Picture a master locksmith working with mechanical precision. Traditional scrapers navigate the web's architecture through XPath and CSS selectors—digital coordinates guiding them through forests of HTML. No intuition, no adaptation; just pure, programmatic execution. These tools march through web pages like soldiers following a map, each step precisely calculated, each extraction a product of rigid instructions etched in code.

Common Use Cases

Where chaos yields to order, traditional scrapers reign supreme. Their domain is the predictable: standardized e-commerce platforms with militant consistency, database-driven websites that never stray from their templates, forms that resist the temptation of modernization. In these digital gardens of uniformity, traditional scrapers perform their symphony of extraction with masterful efficiency.

Advantages

Simplicity breeds reliability—a mantra that traditional scrapers embody perfectly. Their predictable performance feels like a steady heartbeat in a world of digital chaos. Without the computational hunger of AI, they operate with lightweight grace. When issues arise, debugging becomes a straightforward investigation rather than a complex diagnostic puzzle. Speed? They're unmatched in their element, processing structured data with the efficiency of a well-oiled machine.

Limitations

Yet every strength casts a shadow. Traditional scrapers crack like fine china when websites evolve—even minor updates can shatter their functionality. They demand technical artisans, skilled in the ancient languages of HTML and CSS, to craft and maintain their operations. Building new scrapers isn't just time-consuming; it's an exercise in digital archaeology, requiring deep excavation of website structures. Dynamic content? JavaScript rendering? These modern web standards might as well be cryptic hieroglyphs to traditional scrapers.

Think of traditional scrapers as specialized tools in a master craftsman's workshop. In their element—handling stable, structured websites—they're unmatched. But as the web evolves into an ever-shifting landscape of dynamic content and fluid designs, their limitations become impossible to ignore. They're not obsolete; they're specialized. Understanding this distinction is crucial for anyone charting their course through the waters of web automation.

The AI-Powered Revolution in Web Scraping

Welcome to the future of web scraping. AI-powered scrapers aren't just tools—they're digital organisms, evolving and adapting in real-time. While their traditional counterparts march to the beat of rigid rules, these intelligent systems dance with the dynamic web, interpreting and learning with every interaction.

How AI Scrapers Work

Forget everything you know about conventional scraping. AI scrapers think. Through the lens of Natural Language Processing, they don't just read web pages—they understand them. Pattern recognition algorithms flow through digital content like water, finding pathways that traditional scrapers never knew existed. These aren't mere tools following a map; they're explorers, charting new territories and blazing trails through the ever-shifting landscape of the modern web.

Key Capabilities

The arsenal of AI scraping is nothing short of revolutionary. Contextual understanding lets these systems grasp meaning beyond mere structure—they don't just see data, they comprehend it. Dynamic layouts that would shatter traditional scrapers become playgrounds for AI's adaptive intelligence. Watch as they piece together patterns across seemingly disparate sources, like digital detectives solving puzzles in real-time. Schema inference? It's almost magical: point them at a new data source, and they'll map its structure with uncanny precision.

Advantages

Liberation from rigid rules changes everything. Where traditional scrapers stumble, AI scrapers soar. Website updates that once triggered cascading failures now pass like gentle breezes. Natural language instructions replace intricate coding requirements—speak, and the AI listens. Edge cases that demanded hours of programming melt away under the adaptive gaze of artificial intelligence. It's not just automation; it's augmentation of human capability.

This isn't just an upgrade to web scraping—it's a paradigm shift. AI scrapers represent a fundamental reimagining of what's possible in data extraction. They bridge the gap between the web's chaos and our need for structured data, turning what was once a brittle, technical process into a fluid dialogue between human intention and machine intelligence. In a world where websites evolve daily and data presents itself in countless forms, AI scraping isn't just an alternative—it's an inevitability.

Real-World Comparison Scenarios

Picture the sprawling landscape of online retail. Traditional scrapers wade through it like soldiers following outdated maps—precise but inflexible. They march efficiently through familiar territory, extracting data with mechanical precision from static pages. Yet introduce a simple site update, and these rigid warriors stumble, their carefully plotted coordinates suddenly leading nowhere.

E-commerce Data Collection

Enter AI scrapers: digital chameleons in the e-commerce jungle. They don't just collect data; they interpret it. Product variations? They understand them intuitively, like a seasoned shopper. Site updates become mere ripples in their adaptive intelligence. Whether navigating Amazon's labyrinth or a boutique storefront, they maintain their bearing with remarkable grace, extracting meaning where traditional scrapers see only chaos.

Content Aggregation

Content—the web's endless river of articles, posts, and media—presents a different challenge entirely. Traditional scrapers approach it like accountants, meticulously checking boxes and following rigid formulas. They excel in uniformity but falter in the face of creativity. Each new layout, each embedded video, each innovative design choice becomes a potential point of failure.

AI scrapers flow through content like literary critics, understanding context and nuance. They don't just scrape; they comprehend. Headlines, body text, footnotes—all fall naturally into place, regardless of their presentation. These systems dance through diverse content formats with the ease of a seasoned journalist, extracting meaning from the maze of modern media.

Research and Analysis

In the realm of research, the contrast becomes stark. Traditional scrapers operate like diligent but literal-minded assistants, excellent at following specific instructions but blind to the bigger picture. They catalog efficiently but cannot connect dots or understand context.

AI scrapers emerge as research partners, not just tools. They piece together relationships across disparate sources, understanding context with near-human intuition. PDF documents, academic papers, diverse data formats—all become accessible through a single, intelligent interface. Where traditional scrapers see isolated data points, AI reveals patterns and connections, transforming raw information into actionable insights.

These real-world scenarios paint a vivid picture: traditional scrapers remain valuable specialists, excelling in controlled environments where precision matters more than flexibility. But AI scrapers represent a quantum leap forward—digital polymaths capable of adapting to the web's endless variations. The choice between them isn't just about capability; it's about matching tools to terrain in the ever-evolving landscape of web data.

Choosing the Right Approach

The battlefield of web scraping demands strategy, not just tools. Like a general surveying the terrain before deployment, your choice between traditional and AI-powered solutions must align with the landscape you're facing. Let's navigate this decision with precision.

When to Use Traditional Scrapers

Sometimes, the tried-and-true approach isn't just adequate—it's optimal. Traditional scrapers, these steadfast soldiers of data collection, still command respect in their specialized domains.

Simple, Stable Websites

Picture digital fortresses that never change their architecture. Here, traditional scrapers move with the precision of Swiss watches. Legacy systems stand like ancient cities, their layouts carved in digital stone. In these unchanging realms, traditional scrapers don't just perform—they excel, their very simplicity becoming their strength.

Highly Structured Data

When data marches in perfect formation—standardized tables, regimented reports, inventory lists that mirror military precision—traditional scrapers find their perfect rhythm. Like master craftsmen working with familiar materials, they extract data with unwavering accuracy and efficiency.

Performance-Critical Applications

Speed matters. In the race against time, where milliseconds separate success from failure, traditional scrapers sprint unencumbered by AI's computational overhead. They're the Olympic sprinters of the data world: specialized, streamlined, and blindingly fast when the track is straight.

When to Choose AI-Powered Solutions

The modern web isn't a garden—it's a jungle. Here's where AI scrapers emerge as digital naturalists, adapted to thrive in complexity.

Dynamic Websites

Modern websites breathe and evolve, their layouts shifting like desert sands. AI scrapers navigate this chaos with almost organic adaptability. JavaScript-heavy applications that would stymie traditional tools become playgrounds for these intelligent systems. They don't just survive change—they expect it.

Diverse Data Sources

Variety isn't just the spice of life—it's the norm of the modern web. AI scrapers float through this diversity like polyglots at an international conference, equally comfortable with structured tables or flowing narratives. They see patterns where traditional scrapers see only noise.

Non-Technical Users

Not everyone speaks the language of code. AI scrapers bridge this gap, translating human intent into digital action. Marketing teams, researchers, analysts—suddenly they're all data scientists, wielding powerful tools through intuitive interfaces. It's democratization through intelligence.

Scaling Requirements

Growth demands adaptability. As your data needs expand, AI scrapers scale not just in volume but in capability. They're not just tools; they're investments in future-proofing your data operations. Each new challenge becomes an opportunity for learning, not an obstacle to overcome.

The choice between traditional and AI-powered scraping isn't just technical—it's strategic. Consider your terrain: stable or shifting? Your troops: developers or business users? Your mission: focused or expanding? In this digital landscape, victory belongs to those who match their tools to their territory with wisdom and foresight.

Future Trends

The horizon of web scraping blazes with possibility. Like seismologists reading the earth's tremors, we can feel the vibrations of coming change—each ripple hinting at transformations that will reshape the landscape of data collection.

The Growing Role of AI in Web Scraping

Imagine intelligence that evolves as swiftly as the web itself. Tomorrow's AI scrapers won't just collect data—they'll anticipate it. Machine learning algorithms are becoming digital savants, their pattern recognition capabilities expanding like neurons forming new connections. Natural language processing grows more nuanced by the day, approaching human-level understanding with uncanny precision. These systems don't just scrape JavaScript-rendered content; they dance with it, interpreting dynamic pages with almost artistic grace.

Watch as these digital entities learn to extract not just content, but context—divining meaning from the subtle interplay of text, image, and layout. They're becoming connoisseurs of the web's rich multimedia tapestry, their understanding deepening with each interaction. Website changes? They adapt in real-time, like chameleons shifting colors. Quality assurance isn't just automated; it's intuitive.

Hybrid Approaches Emerging

The future isn't about choosing sides—it's about synthesis. Traditional and AI approaches are merging like tributaries into a mighty river. Smart fallback systems pivot seamlessly between methodologies, choosing the perfect tool for each moment. It's not just combination; it's orchestration. These hybrid systems conduct their operations with fluid grace, automated conductors selecting the perfect instrument for each digital movement.

Performance optimization becomes an art form. Speed and adaptability find their balance, not in compromise, but in harmony. Error handling evolves from simple recovery to predictive prevention. The rigid boundaries between traditional and AI approaches dissolve, revealing something new: systems that think and execute with unprecedented sophistication.

Impact of Evolving Web Technologies

The web itself is transforming. Modern applications pulse with complexity, their dynamic rendering pushing the boundaries of what's possible. Progressive web apps blur the lines between desktop and browser, while security measures rise like digital fortresses. APIs become the new bedrock, reshaping how data flows through the digital ecosystem.

Web Assembly rewrites the rules of browser capabilities, while privacy regulations cast long shadows over data collection practices. Each evolution demands response, adaptation, innovation. The tools of tomorrow must navigate this shifting maze with increasing sophistication.

This isn't just evolution—it's revolution in slow motion. Traditional scraping techniques won't vanish; they'll be absorbed, transformed, integrated into something greater. The future belongs to solutions that can think, learn, and adapt—digital organisms evolved to thrive in an ecosystem of ever-increasing complexity. In this brave new world of web scraping, the only constant is change, and the only successful strategy is continuous adaptation.

Conclusion

Standing at the crossroads of web scraping technology, we've mapped the terrain between tradition and innovation. This isn't just a choice between tools—it's a decision that echoes through the corridors of data-driven decision making, shaping how organizations interact with the digital world.

Summary of Key Differences

The landscape we've traversed reveals a stark contrast. Traditional scrapers stand like seasoned sentinels, their strength lying in precision and performance within known boundaries. They're the master craftsmen of the digital world, excelling in their workshops of structured data. Yet in the wild territories of modern web architecture, AI-powered solutions emerge as digital naturalists, adapting and thriving where rigid rules falter. Each approach carves its own niche in the ecosystem of data collection, their strengths and limitations drawing clear battle lines in the sand.

The Shift Toward AI-Powered Solutions

Watch closely—you can see the tides turning. Modern websites pulse with complexity, their structures shifting like desert dunes. In this dynamic landscape, AI solutions aren't just tools; they're evolving organisms, learning and adapting with every interaction. Non-technical users demand keys to the kingdom of data, while maintenance overhead weighs heavy on traditional approaches. The future whispers in algorithms and adapts with machine learning. It's not just a trend—it's a transformation.

Next Steps for Decision-Makers

Assess Your Current Needs Don't just look—analyze. Dive deep into your organization's digital DNA. What data flows through your veins? What expertise walks your halls? Your current position isn't just a starting point—it's the foundation of your data collection strategy. Map your territory with the precision of a cartographer; understand your landscape before choosing your path.

Plan for Future Requirements The horizon isn't static—it's alive with possibility. Tomorrow's data needs cast long shadows over today's decisions. Project not just growth, but evolution. New data sources will emerge like digital oases in the desert of information. Your team's skills will expand, and your tools must grow with them. Factor in not just costs, but capabilities.

Consider a Hybrid Approach Why choose when you can synthesize? The future may not lie in strict dichotomies but in intelligent fusion. Traditional and AI approaches can dance together, each leading where they step most surely. Pilot programs become laboratories of innovation, testing grounds where theory meets practice. Flexibility isn't just an option—it's a survival trait.

The path forward isn't marked by absolutes but by adaptation. In this ever-evolving landscape of web scraping, success belongs to those who can read the terrain and choose their tools wisely. The future beckons not to those who simply collect data, but to those who can harvest it with intelligence, efficiency, and adaptability.

The choice you make today echoes into tomorrow. Choose not just with your current needs in mind, but with an eye toward the horizon where web technologies continue to evolve and data grows ever more valuable. In the end, the most powerful tool isn't traditional or AI—it's the wisdom to know which to use, and when.